Beyond the Plateau Narrative: Our Perspective on AI Model Development

Following the mixed reception of ChatGPT 5 and growing speculation about an AI development plateau, we wanted to share our analysis of the current state and trajectory of AI model capabilities.

Our Position

We remain bullish on continued AI advancement. The evidence suggests we are still early in model development, and the enterprise value creation curve is beginning to accelerate rather than flatten.

OpenAI GPT-5 Announcement

The Evolution of Model Intelligence

The foundation of current AI capabilities still rests primarily on pre-training with text data followed by human preference optimization. However, three significant developments are expanding the frontier of what's possible:

1. Self-Supervised Problem Solving

DeepSeek recently demonstrated that models can improve themselves by generating problems, attempting solutions, and verifying results—creating an autonomous improvement cycle. This approach has yielded substantial gains in mathematics, coding, and other domains with well-defined problem spaces and verifiable solutions.

This represents a shift from purely human-supervised learning to models that can identify and learn from their own mistakes without human intervention.
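The generate, attempt, verify cycle can be sketched for a domain where answers are mechanically checkable. This is a minimal illustration under stated assumptions, not DeepSeek's training pipeline: the multiplication domain, the problem generator, and the noisy `attempt` function standing in for a model are all hypothetical. It shows one round of producing a verified reward signal without a human grader. The policy update that would actually make a model better is deliberately omitted.

```python
import random
from dataclasses import dataclass


@dataclass
class Problem:
    a: int
    b: int

    def answer(self) -> int:
        # Ground truth is computable, which is what makes this domain "verifiable".
        return self.a * self.b


def generate_problems(rng: random.Random, n: int) -> list:
    """Hypothetical generator: the system proposes its own practice problems."""
    return [Problem(rng.randint(2, 99), rng.randint(2, 99)) for _ in range(n)]


def attempt(problem: Problem, rng: random.Random, error_rate: float) -> int:
    """Stand-in for a model's solution attempt: sometimes slightly wrong."""
    guess = problem.a * problem.b
    if rng.random() < error_rate:
        guess += rng.choice([-10, -1, 1, 10])  # any nonzero offset makes it wrong
    return guess


def verify(problem: Problem, proposed: int) -> bool:
    """Automatic checker: no human grader is involved."""
    return proposed == problem.answer()


def self_improvement_round(seed: int = 0, n: int = 200, error_rate: float = 0.3):
    """Generate, attempt, verify; return (accepted, rejected) attempts.

    In RL-style training, accepted attempts would earn reward 1 and reinforce the
    strategies that produced them; that update step is not shown here.
    """
    rng = random.Random(seed)
    accepted, rejected = [], []
    for problem in generate_problems(rng, n):
        guess = attempt(problem, rng, error_rate)
        if verify(problem, guess):
            accepted.append((problem, guess))
        else:
            rejected.append((problem, guess))
    return accepted, rejected


accepted, rejected = self_improvement_round()
print(f"verified: {len(accepted)}, rejected: {len(rejected)}")
```

The key design choice is that the reward comes from `verify`, not from a person, which is why this style of training works best in mathematics, coding, and other domains with checkable answers.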

2. Dynamic Computational Allocation

The latest generation of models, including releases from DeepSeek, Grok, Gemini Deep Think, Claude, and ChatGPT 5, varies the amount of computation spent on each query according to problem complexity.

Traditional models apply fixed computation to every query, regardless of difficulty. New "thinking" models dynamically allocate computational resources: minimal for simple queries, extensive for complex problems. This is achieved through reinforcement learning that rewards successful problem-solving strategies.
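The idea of spending more compute on harder problems can be illustrated with a hand-written analogue. Thinking models learn how long to reason through reinforcement learning; the sketch below instead uses adaptive self-consistency, which keeps sampling answers until one reaches a vote threshold. `noisy_solver` is a hypothetical stand-in for a model call, and `difficulty` is an assumed knob for how often it errs.

```python
import random
from collections import Counter


def noisy_solver(correct: str, difficulty: float, rng: random.Random) -> str:
    """Hypothetical model call: harder problems yield more scattered answers."""
    if rng.random() < difficulty:
        return f"wrong-{rng.randint(1, 5)}"
    return correct


def solve_adaptively(correct, difficulty, seed=0, votes_needed=3, max_samples=32):
    """Sample until one answer has `votes_needed` votes or the budget runs out.

    Returns (answer, samples_used). Easy queries stop early; hard ones
    tend to consume more of the budget.
    """
    rng = random.Random(seed)
    counts = Counter()
    for used in range(1, max_samples + 1):
        counts[noisy_solver(correct, difficulty, rng)] += 1
        answer, votes = counts.most_common(1)[0]
        if votes >= votes_needed:
            return answer, used
    # Budget exhausted: fall back to the most common answer seen so far.
    return counts.most_common(1)[0][0], max_samples


for d in (0.0, 0.5):
    print(d, solve_adaptively("42", d))
```

With `difficulty=0.0` the loop stops after exactly `votes_needed` samples; as difficulty rises, votes scatter across answers and more samples are typically drawn before one answer reaches the threshold. `max_samples` acts as a hard cap on spend.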

3. Learning Through Interaction and Simulation

Models are beginning to move beyond static training data into environments where they can act, experiment, and learn from feedback. This includes advances in world foundation models—from DeepMind's Genie 3 to NVIDIA's Cosmos—that simulate rich, physics-consistent environments in real time. Such interactive settings allow AI to develop causal reasoning, spatial understanding, and planning skills essential for robotics, autonomous systems, and other embodied tasks.

The same principle applies to other domains: code execution sandboxes, economic simulations, and synthetic data labs all give models the ability to test hypotheses, observe outcomes, and refine strategies. This shift expands the problem space from symbolic reasoning over text to embodied and experiential intelligence, where progress is driven by continuous interaction with dynamic systems.
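The act, observe, refine loop behind interactive learning can be shown at its smallest scale with an epsilon-greedy bandit. This is a deliberately tiny stand-in for the rich simulators described above: the three actions and their success probabilities are invented, and the agent discovers which action works best purely from the feedback it receives.

```python
import random


def run_bandit(true_rewards, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent learning which action pays off, purely from feedback."""
    rng = random.Random(seed)
    n = len(true_rewards)
    estimates = [0.0] * n  # learned value of each action
    counts = [0] * n       # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                           # explore: try something
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit the current best
        # The environment returns a noisy success signal (1 or 0).
        reward = 1.0 if rng.random() < true_rewards[action] else 0.0
        counts[action] += 1
        # Incremental mean: refine the estimate from the observed outcome.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.5, 0.8])
print([round(e, 2) for e in estimates], counts)
```

Nothing about the best action is programmed in; the agent's preference for the third action emerges from trial and error, which is the same principle, at vastly smaller scale, as learning inside a world model or code sandbox.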

Emergent behaviors include:

- Hypothesis formation and testing

- Error recognition and strategic backtracking

- Problem decomposition into manageable components

- Integration of multiple solution approaches

- Tool utilization when beneficial

- Spatial reasoning and navigation in dynamic environments

- Prediction of physical outcomes based on learned world dynamics

These capabilities emerge from training rather than explicit programming, suggesting significant room for continued improvement.

Case Study: International Mathematical Olympiad Performance

The International Mathematical Olympiad provides a concrete benchmark for measuring progress:

Google DeepMind's specialized systems (AlphaProof and AlphaGeometry 2) achieved Silver Medal performance at IMO 2024, but required 60 hours versus the 4.5 hours allotted per competition session and relied on tools unavailable to human competitors.

Publicly available AI models tested on the IMO 2025 problems failed to reach Bronze Medal level.

At IMO 2025, both OpenAI and Google DeepMind announced Gold Medal performance under standard competition conditions: no special tools, standard time limits, identical problem format, and a single submission.

This progression from specialized systems requiring 60 hours to general models succeeding under human constraints within one year indicates acceleration, not plateauing.

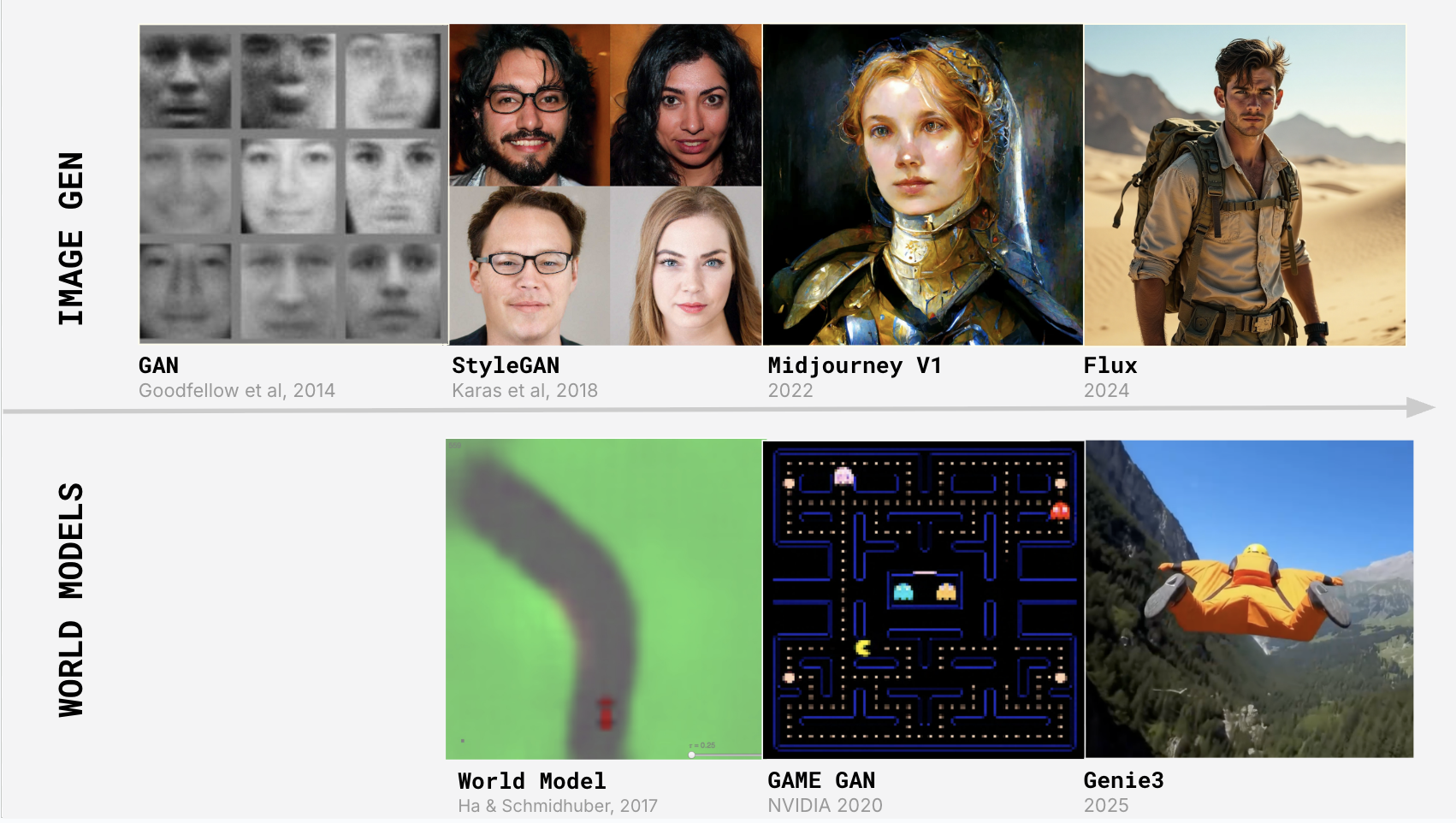

Case Study: Evolution of World Models

The rapid progress in world models shows that AI capability growth is far from plateauing—extending beyond reasoning into high-fidelity simulation.

World Models (Ha & Schmidhuber) showed that a neural network could learn a compact latent-space simulation of an environment and train an agent entirely inside this "dream" before transferring it to the real game.
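The core World Models idea, learning a model of the environment and then training a policy inside it, can be sketched in tabular form. This is a simplification under stated assumptions: Ha & Schmidhuber used a VAE and a recurrent mixture-density network over pixels and evolved a controller with CMA-ES, whereas this sketch counts transitions in a hypothetical six-cell corridor, plans with value iteration entirely inside the learned model, and only then tests the policy in the "real" environment.

```python
import random
from collections import defaultdict

N_STATES, GOAL = 6, 5  # hypothetical corridor: cells 0..5, reward at cell 5
ACTIONS = (-1, +1)     # move left or right


def real_step(state, action):
    """The 'real game': deterministic corridor dynamics, walls at both ends."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0)


def collect_experience(episodes=200, horizon=20, seed=0):
    """Gather (state, action, next_state, reward) tuples with a random policy."""
    rng = random.Random(seed)
    data = []
    for _ in range(episodes):
        state = rng.randrange(GOAL)  # start anywhere except the goal
        for _ in range(horizon):
            action = rng.choice(ACTIONS)
            nxt, reward = real_step(state, action)
            data.append((state, action, nxt, reward))
            if nxt == GOAL:
                break
            state = nxt
    return data


def fit_world_model(data):
    """Estimate P(next_state | state, action) and mean reward from counts."""
    next_counts = defaultdict(lambda: defaultdict(int))
    reward_sums = defaultdict(float)
    visits = defaultdict(int)
    for s, a, s2, r in data:
        next_counts[(s, a)][s2] += 1
        reward_sums[(s, a)] += r
        visits[(s, a)] += 1
    return {
        sa: ({s2: c / visits[sa] for s2, c in nexts.items()}, reward_sums[sa] / visits[sa])
        for sa, nexts in next_counts.items()
    }


def plan_in_dream(model, gamma=0.9, iters=100):
    """Value iteration that queries only the learned model, never real_step."""
    values = [0.0] * N_STATES  # the goal is terminal, so its value stays 0

    def q_value(s, a):
        if (s, a) not in model:
            return 0.0  # never observed: the model has nothing to say
        probs, reward = model[(s, a)]
        return reward + gamma * sum(p * values[s2] for s2, p in probs.items())

    for _ in range(iters):
        for s in range(N_STATES):
            if s != GOAL:
                values[s] = max(q_value(s, a) for a in ACTIONS)
    return {s: max(ACTIONS, key=lambda a: q_value(s, a)) for s in range(N_STATES) if s != GOAL}


def steps_to_goal(policy, start=0, max_steps=50):
    """Run the dream-trained policy in the real environment."""
    state = start
    for step in range(1, max_steps + 1):
        state, _ = real_step(state, policy[state])
        if state == GOAL:
            return step
    return None


policy = plan_in_dream(fit_world_model(collect_experience()))
print(policy, steps_to_goal(policy))
```

Only `collect_experience` touches the real environment during learning; all planning happens against the fitted model, which is the "dream" step in miniature.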

NVIDIA's GameGAN learned to simulate PAC-MAN from recorded gameplay frames and player actions alone, with no game engine, producing a fully playable, interactive world via a GAN.

Genie 1 marked the shift toward foundation world models, trained on large-scale internet video to generate diverse, controllable 2D game worlds.

GameNGen applied diffusion models to real-time game simulation, running an interactive version of DOOM at over 20 frames per second on a single TPU, with game dynamics learned rather than hand-coded.

Genie 3 delivered real-time, text-to-3D interactive worlds that stay physically consistent at 24 FPS, allowing free navigation and object interaction. This opens applications from game prototyping to embodied agent training in realistic, simulated settings.

NVIDIA's Cosmos targets robotics and autonomous driving, simulating photorealistic, physics-consistent worlds to train policies for self-driving cars and robots, with the aim of transferring them directly from simulation to the real world.

Google Genie 3: A New Frontier for World Models

This progression from small-scale research demos to foundation models capable of rendering, simulating, and enforcing physics in real time within just a few years points to acceleration, not plateauing. The curve in simulation mirrors the leap in image generation, from blurry GAN outputs to photorealism, suggesting that we are still at the beginning of an equally steep growth phase.

AI model progression: image generation from GAN (2014) to Flux (2024), and world models from World Models (2018) to Genie 3 (2025)

Areas of Active Development

Enhanced Reasoning Capabilities

We anticipate improvements in:

- Problem complexity assessment and appropriate resource allocation

- Strategic planning and approach selection

- Systematic problem decomposition

- Intelligent backtracking from failed solution paths

- Integration of external tools (code execution, search, databases) into reasoning processes

Long-Term Memory and Continuous Learning

Most models still work in isolation, with no lasting awareness of prior interactions. Early persistent-memory systems—such as ChatGPT's memory feature, rolled out to users in 2024–2025—store facts, preferences, and history outside the core model and retrieve them when relevant.
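Storing facts outside the model and retrieving them when relevant can be sketched in a few lines. This is not how ChatGPT's memory is implemented; the `MemoryStore` class, the bag-of-words cosine similarity standing in for learned embeddings, and the prompt format are all hypothetical.

```python
import math
import re
from collections import Counter


def _bag_of_words(text):
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def _cosine(a, b):
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical memory kept outside the model: saved facts plus naive retrieval."""

    def __init__(self):
        self.entries = []  # list of (text, bag-of-words vector)

    def remember(self, fact):
        self.entries.append((fact, _bag_of_words(fact)))

    def recall(self, query, k=2, min_score=0.1):
        """Return up to k stored facts whose similarity to the query clears min_score."""
        q = _bag_of_words(query)
        scored = sorted(((_cosine(q, vec), text) for text, vec in self.entries), reverse=True)
        return [text for score, text in scored[:k] if score >= min_score]

    def build_prompt(self, user_message):
        """Prepend retrieved memories to the message sent to the model."""
        memories = self.recall(user_message)
        if not memories:
            return f"User: {user_message}"
        context = "\n".join(f"- {m}" for m in memories)
        return f"Relevant memories:\n{context}\n\nUser: {user_message}"


store = MemoryStore()
store.remember("User prefers Python for data analysis")
store.remember("User's dog is named Rex")
print(store.build_prompt("Which language should I use for data analysis?"))
```

Because the core model never changes, everything it "remembers" must be squeezed back into the text context at query time, which is exactly the limitation the next paragraph points to.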

True progress will require richer, structured memories that capture more than can fit in a text context, enabling models to build on experience over weeks, months, and years.

Leveraging User Interactions

Current models' ability to incorporate usage data for improvement is still limited. There is significant headroom for real-time "active learning" that is contextual and personalized.
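In the machine-learning sense, active learning means the system chooses which examples to request feedback on. A minimal sketch, assuming a logistic model over hypothetical suggestion features, combines uncertainty sampling (ask about the suggestion the model is least sure of) with an online update once the user responds.

```python
import math


def predict(weights, features):
    """Logistic model: probability the user accepts a suggestion."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def pick_query(weights, candidates):
    """Uncertainty sampling: request feedback where p is closest to 0.5."""
    return min(candidates, key=lambda f: abs(predict(weights, f) - 0.5))


def online_update(weights, features, label, lr=0.5):
    """One gradient step on log-loss after feedback (label 1 = accepted, 0 = rejected)."""
    error = label - predict(weights, features)
    return [w + lr * error * x for w, x in zip(weights, features)]


weights = [0.0, 1.0]                                # hypothetical current model
candidates = [[1.0, 2.0], [1.0, 0.1], [1.0, -3.0]]  # bias term + one feature each
query = pick_query(weights, candidates)             # -> [1.0, 0.1]
weights = online_update(weights, query, label=0)    # the user rejected it
print(query, [round(w, 3) for w in weights])
```

Asking only about uncertain cases keeps the feedback burden on users low while targeting the interactions most likely to change the model.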

Architectural Innovation

The transformer architecture, while successful, is unlikely to be optimal. Research into alternative architectures, active learning systems, and improved memory mechanisms could yield substantial efficiency and capability gains.

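In a recent paper, Chinese researchers described an LLM-driven research system that drew on prior architecture research, ran its own experiments, and reported discovering 106 novel linear-attention architectures that reached lower loss and better benchmark scores than human-designed baselines.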
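The propose, evaluate, select loop behind automated architecture discovery can be illustrated with a plain evolutionary search. This is not the paper's method: the search space, the mutation rule, and especially `proxy_loss` (invented numbers standing in for actually training each candidate) are hypothetical.

```python
import random

SEARCH_SPACE = {
    "layers": [4, 8, 12, 16],
    "width": [256, 512, 1024],
    "attention": ["softmax", "linear", "hybrid"],
}


def proxy_loss(arch):
    """Toy stand-in for 'train briefly and measure validation loss'. Invented numbers."""
    base = {"softmax": 2.0, "linear": 2.1, "hybrid": 1.9}[arch["attention"]]
    return base - 0.02 * arch["layers"] - 0.0002 * arch["width"]


def mutate(arch, rng):
    """Propose a variant by changing one design choice."""
    child = dict(arch)
    key = rng.choice(list(SEARCH_SPACE))
    child[key] = rng.choice(SEARCH_SPACE[key])
    return child


def evolve(generations=30, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [{k: rng.choice(v) for k, v in SEARCH_SPACE.items()}
                  for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=proxy_loss)                  # evaluate: lower loss is better
        parents = population[: population_size // 2]     # select: keep the best half
        children = [mutate(rng.choice(parents), rng)     # propose: mutate survivors
                    for _ in range(population_size - len(parents))]
        population = parents + children
    return min(population, key=proxy_loss)


best = evolve()
print(best, round(proxy_loss(best), 4))
```

Because the best half always survives, the best loss found can never get worse from one generation to the next; the expensive part in real systems is the evaluation step, which this sketch replaces with a formula.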

Enterprise Implications: From Theory to Accelerated Adoption

These improvements are driving accelerated enterprise adoption, particularly for complex use cases leveraging advanced reasoning.

Key examples:

- Software development (AI-assisted coding, debugging, refactoring, architecture decisions)

- Quality-cost optimization (allocating reasoning resources to high-stakes decisions)

- Closed-loop autonomous agents (reason, call tools, verify outputs, act, log results, learn from outcomes)

- Pre-production simulation (model rare/high-risk scenarios before production impact)
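The closed-loop agent pattern from the list above can be sketched end to end with toy arithmetic tools. Everything here is hypothetical: a real agent would use an LLM to choose tools, and `sloppy_calculator` is deliberately buggy so the verification and learning steps have something to catch.

```python
import math
from collections import defaultdict


def exact_calculator(expr):
    """Hypothetical tool: evaluates 'a op b' for +, -, * without calling eval()."""
    a, op, b = expr.split()
    a, b = float(a), float(b)
    return {"+": a + b, "-": a - b, "*": a * b}[op]


def sloppy_calculator(expr):
    """Deliberately buggy tool: always adds, whatever the operator."""
    a, _, b = expr.split()
    return float(a) + float(b)


def verify(expr, result):
    """Independent check via the inverse operation instead of trusting the tool."""
    a, op, b = expr.split()
    a, b = float(a), float(b)
    if op == "+":
        return math.isclose(result - b, a)
    if op == "-":
        return math.isclose(result + b, a)
    if op == "*":
        return result == 0.0 if b == 0 else math.isclose(result / b, a)
    return False


class Agent:
    def __init__(self, tools):
        self.tools = tools                        # name -> callable
        self.stats = defaultdict(lambda: [0, 0])  # name -> [verified, attempts]
        self.log = []                             # (task, tool, result, verified)

    def _ranked_tools(self):
        # Learn from outcomes: try tools with the best smoothed success rate first.
        def score(name):
            ok, n = self.stats[name]
            return (ok + 1) / (n + 2)
        return sorted(self.tools, key=score, reverse=True)

    def solve(self, task):
        for name in self._ranked_tools():              # reason: choose a tool
            result = self.tools[name](task)            # call the tool
            ok = verify(task, result)                  # verify the output
            self.stats[name][0] += int(ok)
            self.stats[name][1] += 1
            self.log.append((task, name, result, ok))  # log the result
            if ok:
                return result                          # act only on a verified answer
        return None                                    # nothing verified: escalate to a human


agent = Agent({"sloppy": sloppy_calculator, "exact": exact_calculator})
print(agent.solve("6 * 7"), agent.solve("10 - 4"))
print(agent.log)
```

Verifying with an independent check, rather than trusting the tool's output, is what lets the agent both reject the bad answer and learn to try the reliable tool first on the next task.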

The shift from simple automation to reasoning-enabled complex problem solving represents a step-function change in what enterprises can delegate to AI systems.

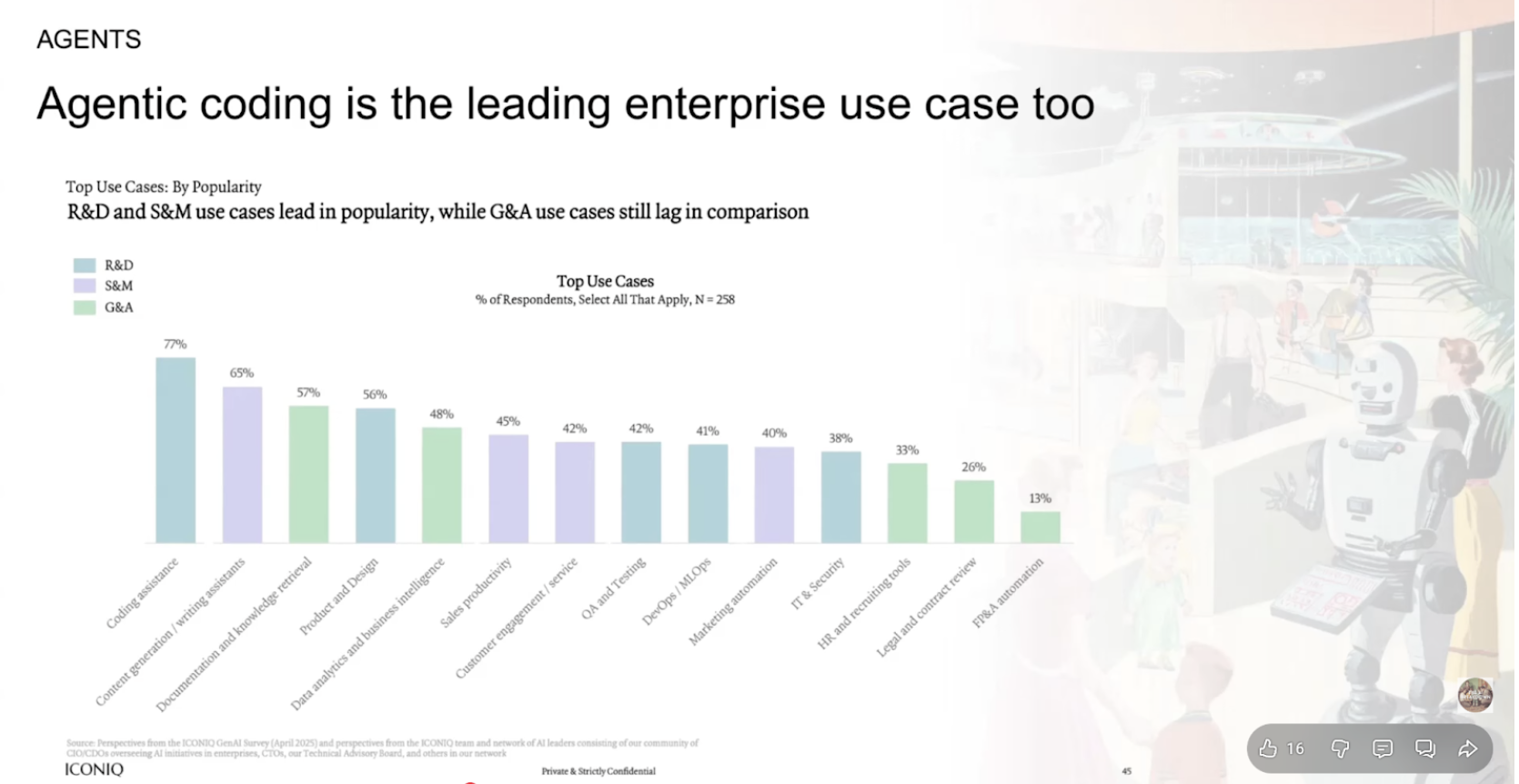

Agentic coding is the leading enterprise use case

Source: ICONIQ GenAI Survey (April 2025)

Enterprise agent deployments tripled in Q2

Source: ICONIQ GenAI Survey (April 2025)

Investment Perspective

We remain bullish on AI's continued advancement and its unique ability to create "why now" moments.

Our investment thesis:

- Proprietary data advantages

- Domain expertise

- Solving complex, high-value problems

We avoid investments in applications competing primarily on AI-powered process automation, as these face inevitable margin compression.

Conclusion

The AI plateau narrative misses the larger picture. We're witnessing continuous improvement in complex reasoning, tool use, and autonomous problem-solving.

The transformative potential of AI is real and accelerating—the key is distinguishing between businesses riding the wave and those building lasting value.

Ready to Build the Future?

Are you building an AI company with defensible advantages? We want to hear from you.
